Explainable artificial intelligence (XAI)

Explainable artificial intelligence (XAI).

May 19, 2022 | By Yaron Shaposhnik

Predictive models have profoundly changed our lives. Whether we are applying for a loan, shopping online, searching for movie recommendations, scrolling through posts on Twitter or LinkedIn, or reading transcriptions of TV shows and voice messages, we are knowingly or unknowingly using predictive models.

What are predictive models?

Predictive models are functions that map an input, which is some form of observable information, to an output, which is a predicted value. For example, based on a patient’s symptoms (input) a model could predict a diagnosis (output). Historically, domain experts developed simple formulas for prediction based on data and using trial-and-error.

These days, however, the leading approach is computational in nature. Model designers collect data and apply simple or complex algorithms to identify patterns that map the input to the predicted output. The input can be as simple as a few numeric values (for example, a patient’s height and weight) or as complicated as text, images, voice, or time series data. This approach is so effective and has become so prevalent that in many business schools today, most graduate students have some exposure to predictive modeling. It is even considered a core competence that students are expected to master in some graduate programs.

Developing and applying predictive modeling

Developing predictive models requires an understanding of theoretical concepts, familiarity with standard techniques and best practices, and knowledge about specific programmatic or visual tools to work with data and construct models. These are essential technical skills that constitute the lion’s share of the training of practitioners, whether they are informed users or advanced model developers.

But beyond the technical aspects of developing models that can reach a desired level of performance, we must also consider the human aspect. It is people who develop models, who are expected to use them, and who are going to be impacted by following recommendations and predictions generated by models. An important dimension related to the interaction of people with models is commonly referred to as interpretability, which refers to the ability of various stakeholders to understand how the model generates a prediction.

A closely related term is explainability, which captures the ability to explain specific predictions. The two terms are complementary, relating to global and local understanding of the model (how the model works in general versus how it behaves in a specific case). The lack of such capacities could hinder model adoption, user trust, and lead to unexpected predictions and algorithms’ behavior [1,2,3,4].

Therefore, understanding models is not only beneficial but at times critical for helping designers build better models, promoting trust, and encouraging users to apply judgment when adopting these models.

How to better understand models and their predictions.

Explainable artificial intelligence, or XAI for short, aims to improve the understanding of models and their predictions. In recent years, a growing number of researchers in academia and industry have developed two schools of thought related to improving our understanding of models.

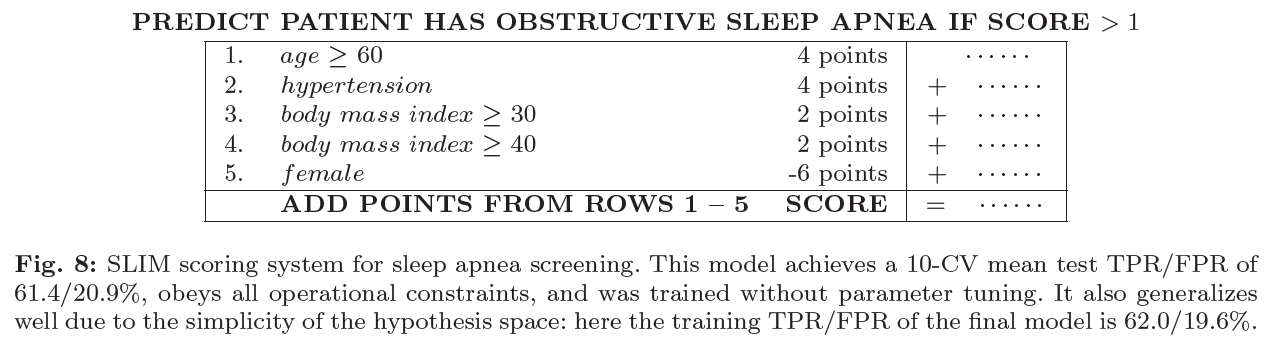

The first school of thought proposes using interpretable predictive models, which are models that were specifically designed to be interpretable (easier for stakeholders to understand). For example, SLIM is a simple linear model that “only requires users to add, subtract and multiply a few small numbers in order to make a prediction.” [5]

Figure 1 (below) displays a model that was constructed to predict the medical condition of sleep apnea. The model consists of five features. When conditions in each feature are satisfied, points are assigned and the sum of these points determines the prediction. In this example, if the sum is greater than 1, the prediction is that the medical condition exists.

Figure 1: Example of an interpretable predictive model to predict sleep apnea. Source: Berk and Rudin 2016 [5].

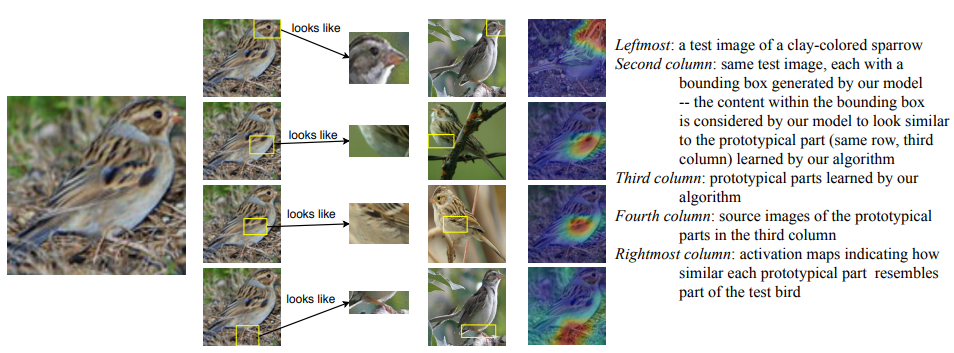

This approach of using interpretable models is not limited to simple models. Recently, a group of researchers developed an interpretable artificial neural network that can predict a bird’s species [6]. While the model itself is complex, the model identifies body parts in the image that characterize certain birds and uses them for prediction. The parts explain why the prediction was made. In Figure 2, we see that to predict the bird’s species, the model identifies the legs, chest, and eye of the bird and compares them to an existing database of images of classified birds. The user can then apply judgment and decide whether the prediction is reasonable (i.e., that the parts of the new bird indeed match the parts of the known birds). Proponents of this approach argue that is it often possible to construct a well-performing model that is competitive with other models while still being interpretable.

Figure 2: Example of an interpretable predictive model to predict bird’s specie. Source: Chen et al. 2019 [6])

The second school of thought proposes various methods for explaining predictions made by an already constructed and possibly complex model. The explanations come in different forms:

Local explanations try to provide a brief explanation relevant to the prediction. For one customer, the explanation for rejecting a loan could be based on the number of credit cards, while for a different customer, the offered explanation could relate to previous defaulting on loans.

Counterfactual explanations tell the user what should change in his/her file for the prediction of the model to change. For example, paying on time over the next year and opening an additional credit card could change the model prediction to “not default”.

Contrastive explanations tell the user why the prediction is not different.

Case-based explanations try to explain predictions by presenting previous similar cases (e.g., other similar customers and whether they defaulted on a loan or not).

Moreover, researchers also developed various visual interactive tools that help the user understand the importance of different factors in the prediction, as well as understand how models behave through interacting with the system.

The next frontier.

Have we solved the problem of how to explain a model’s predictions? Significant progress has been made on this front. Tech giants such as Microsoft and IBM released open-source packages that implemented some of the leading interpretability and explainability methods [7,8]. Other companies, such as Google, are following suit, offering services to interpret models [9]. This is on top of an array of alternative methods developed by individual researchers [10].

But there is still ground left to cover. As with any tool, interpretability methods can be a blessing or a curse. These methods can improve the understanding of users and help them make informed decisions, or on the other hand, increase compliance by making the users feel better about following models’ recommendations without a real understanding of how they work. Improperly used, these methods could also manipulate users. For example, with case-based explanations that aim to justify certain predictions by presenting previous cases, a manipulative system could cherry-pick its cases to justify arbitrary predictions. Researchers have also pointed to the fact that to generate simple explanations, some methods deliver inaccurate approximations that could backfire and harm trust [11].

In light of the real and growing need to understand predictive models better, companies, organizations, and academic institutions are investing considerable time and effort in this topic. The complexity involved in studying the development of algorithms and their connection to human behavior calls for increased interdisciplinary research between fields such as computer science, economics, business, psychology, sociology, and philosophy.

Perhaps in the next few years, we will find out which explanation methods overcame the natural selection of research and became the standard methods we all use. In the meantime, it is up to us to be mindful of the implication of using and creating predictive models.

Yaron Shaposhnik is an assistant professor of Operations Management and Information Systems at Simon Business School. His research focuses on developing, applying, and studying predictive models to improve decision-making.

Follow the Dean’s Corner blog for more expert commentary on timely topics in business, economics, policy, and management education. To view other blogs in this series, visit the Dean's Corner Main Page.